Discover more from The Pull Request

Hunting predators

How Apple is looking at the photos on your phone, and how this moral necessity could go wrong

It would be better for him if a millstone were hung around his neck and he was thrown into the sea, than to offend one of these little ones.

-Luke 17:2

Just last week Apple announced they’re shipping a system that automatically scans the photos on your iPhone for Child Sexual Abuse Material (CSAM), the euphemistic term of art for kiddie porn. This caused a frisson of alarm among the privacy commentariat, including even a Twitter thread from Facebook’s product head for WhatsApp, Will Cathcart, denouncing the plan.

As much as I’ve historically been against the so-called ‘content moderation’ by the tech giants, and have every reason in the world to dislike Apple, I found myself greeting the news with more of a shrug, or even some applause.

For starters, none of this is new. Facebook, for example, has been automatically scanning your photos for CSAM for ages, and very successfully at that. In my memoir Chaos Monkeys, I described the time I headed up the team responsible for policing Facebook Ads and was drawn into the wider efforts to programmatically stop bad guys, whether sketchy marketers or child predators:

Our social shadow warriors did have one showcase: there was an internal Facebook group with the provocative name of “Scalps@Facebook.” It was essentially an online trophy case of taxidermied delinquents, the sexual predators, stalkers, and wife beaters whom the FB security team, in conjunction with law enforcement, had managed to catch. The weird thing about it was that the posts featured profile photos of the alleged criminals, along with somewhat gloating accounts of how the “perp” had been hunted down, and which local law enforcement agencies had collaborated. And so Facebook employees would randomly see in their feed the guilty face of depraved desire: some guy in the Philippines or Arkansas, and his rap sheet about trying to induce fourteen-year-old girls to meet him, along with a line or two indicating he had been summarily dispatched into the maw of the legal system.

That’s right: Facebook has caught thousands upon thousands of child sexual predators, and does so very aggressively (I speculate as to why they don’t make a bigger deal about it in that same passage).

So what’s different about Apple’s efforts? Well, the technical implementation is very different from what Facebook and other companies do. For starters, it’s happening on-device rather than in the cloud, which seems to provoke a level of privacy protectiveness in consumers. As I’ve described in prior posts, the entire privacy model for user data—not just ads, but all of it—is moving to on-device storage and computation. That’s just how your user data will get used in the future, and this plan is no different.

Which doesn’t mean it’s perfect. In this post, I’m going to dissect the consumer-relevant aspects of the plan and ponder the real-world tradeoffs. Before that though, a brief aside for the privacy absolutists who might be sputtering in rage at the mere suggestion…

My dear libertarians and privacy absolutists

For those about to launch into a brave but principled defense of privacy rights despite the needs of law enforcement, let’s take a reality check, shall we?

I present to you the case of United States v. Hogle.

Christian Hogle was the owner of Wild Island Café on Orcas Island, a business I often frequented when I lived there (great bagels). Married with family and kids in the local school, he was your typical pillar-of-the-community type. He was also a pedophile who trafficked in child pornography on Telegram, as the community discovered when FBI agents swept into his cafe and arrested him one morning in 2018. Since he was a local, I looked up the (public) Federal indictment to see what they had on him. Much to my shock and disgust, unversed in these legal matters as I am, it’s apparently the case that arresting agents put explicit details (down to the most heinous and sordid specifics of the pornography itself) in the legal complaint to highlight the criminality at work.

Goes without saying, every trigger warning in the universe applies and your soul might not emerge intact if you read the legal filings. Thus warned, if you want to read about what we’re dealing with here, I’ll save you the PACER lookup and fees: here it is.

Read that, principled libertarian? Take in all the details? Can you imagine your child or your beloved niece or nephew in the hellish situations described? Has the conversation moved on from privacy absolutism to what form of public execution we’re going to institute for those guilty—beyond the shadow of a doubt—of producing and distributing this material?

I thought that might happen. On with the discussion.

The Apple proposal

The normie high-level take is here, and the technical white-paper is here.

We’ll be discussing the latter.

There’s a lot of fancy cryptography in the scheme, part of it to make sure the user is protected from Apple having access to photos that do not set off alarms, but we’ll skip that as that’s not really the potentially problematic part. It’s good Apple thought of that, but if we’re talking about what’s going to create scandal-ridden media cycles a year from now, it’s not this ‘safety voucher’ business.

Let’s focus on the part that will (almost certainly) be the subject of New York Times hysterics in the near future, which is how images get matched to begin with.

First, some technical preliminaries. Pull Request readers are probably familiar with the computer-science notion of a hash function, but it’s worth reviewing both for those who aren’t and also to distinguish that notion from the ‘image hashing’ that Apple is doing.

A hash function is a very commonly used mathematical tool that takes just about any input (text, images, files, anything that can be represented in bits) and maps it to a fixed-length range of output values. For example, let’s invent a toy hash() example that takes strings and maps them to numbers ranging from zero to a million. So, let’s say hash(‘antonio’) = 589345. To work properly, a hash must be irreversible, meaning if I’m given the output 589345 alone, I have no clue as to what the input was to generate this, and the only way I can figure it out is via brute-force feeding of all inputs into hash() until I get lucky. That’s prohibitively hard for any sufficiently large domain of inputs in real-world hash functions.

Secondly (and we’re defining this feature only to remove it shortly), the output of hash() must be random across its outputs, such that any input is just as likely to map to any output, even inputs that are similar. So for example, hash(‘antonia’) = 000435, which is completely unlike the output for ‘antonio’. Thus given a set of outputs of hash(), even knowing a few input/output pairs doesn’t help me reverse the hash of an unknown input string. It’s a purely one-way function: data goes in and can be compared, but no data is revealed thereby.
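To make the toy hash() concrete, here is a minimal sketch in Python. It leans on SHA-256 from the standard library and squeezes the result into the zero-to-a-million range; the names toy_hash and differing_bits are made up for illustration, and the numbers it prints will not match the invented values in the text above.

```python
import hashlib


def toy_hash(text: str, buckets: int = 1_000_000) -> int:
    """Map a string to an integer in [0, buckets) via SHA-256 (illustration only)."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % buckets


def differing_bits(a: str, b: str) -> int:
    """Count how many of the 256 SHA-256 output bits differ between two inputs."""
    da = int.from_bytes(hashlib.sha256(a.encode("utf-8")).digest(), "big")
    db = int.from_bytes(hashlib.sha256(b.encode("utf-8")).digest(), "big")
    return bin(da ^ db).count("1")


if __name__ == "__main__":
    # Two inputs that differ by a single letter land in unrelated buckets.
    for name in ("antonio", "antonia"):
        print(name, toy_hash(name))
    # Roughly half of the output bits flip, even for a one-letter change.
    print("bits that differ:", differing_bits("antonio", "antonia"))
```

Squeezing a 256-bit digest into a million buckets throws away most of the collision resistance, so this is strictly a teaching toy.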

The net result is that if I want to tell whether piece of data X is the same as piece of data Y, I can just store the hash of Y and calculate the hash of X, and determine equality that way. The checker need never look at X itself; if we don’t trust the checker (e.g. Apple), this is a big plus.

(In case you’re thinking, oh, that would be a cool way to check passwords on login without having to move around the actual password, that’s exactly how authentication works, when properly designed. Unlike when Facebook screwed up bigly.)
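For the curious, a rough sketch of that login check using only Python's standard library. The salt and the deliberately slow key-derivation step go beyond the bare hash-and-compare described above, but they are what a properly designed system typically adds; the function names and the iteration count are my own assumptions, not anyone's production code.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 200_000  # assumed work factor, chosen only for illustration


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest); only these two values are stored, never the password."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, stored_digest: bytes) -> bool:
    """Re-hash the login attempt with the stored salt and compare digests."""
    _, candidate = hash_password(password, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, stored_digest)


if __name__ == "__main__":
    salt, stored = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, stored))
    print(verify_password("wrong guess", salt, stored))
```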

Great, so hashing is a fairly secure way of checking data equality without sharing the data itself. Wonderful!

But what about when you want a ‘fuzzier’ match to the data in question? In other words, you don’t want exact bit-by-bit equality, but actually want to sift out data X that’s kinda, sorta like Y, i.e., you actually want hash(‘antonia’) to be close to hash(‘antonio’). Something like hash(‘antonia’) = 589347. Then, if you define a ‘distance’ in the hashed number range, you could filter things lexically close to ‘antonio’ (e.g. strings like ‘antonii’ and ‘entonio’ that also hash to nearby numbers).

This would be a somewhat ham-handed way of defining lexical distance, since text (thanks to the underrated miracle of an alphabet) is a one-dimensional sequence of finite elements. There are easier ways to define a distance between strings. Rich media like images or music however are much more complex; there, finding some magical function that maps a world of sights and sounds to a single number that correctly judges the human experience of that media is a worthwhile challenge.
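One of those easier ways is plain old edit distance: count the single-character insertions, deletions, and substitutions needed to turn one string into the other. A short sketch (the function name is mine, and this is the textbook Levenshtein recurrence rather than anything specific to Apple's system):

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between a and b, computed one row at a time."""
    previous = list(range(len(b) + 1))  # distance from the empty prefix of a
    for i, ch_a in enumerate(a, start=1):
        current = [i]  # turning a[:i] into the empty string takes i deletions
        for j, ch_b in enumerate(b, start=1):
            substitution_cost = 0 if ch_a == ch_b else 1
            current.append(
                min(
                    previous[j] + 1,  # delete ch_a
                    current[j - 1] + 1,  # insert ch_b
                    previous[j - 1] + substitution_cost,  # substitute or keep
                )
            )
        previous = current
    return previous[-1]


if __name__ == "__main__":
    for other in ("antonia", "antonii", "entonio", "zuckerberg"):
        print("antonio ->", other, edit_distance("antonio", other))
```

No hashing required: the strings themselves carry enough structure to compare directly, which is exactly what images and music lack.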

Here we have so-called ‘perceptual hashes’: a function that mimics the human perception of complex media and organizes them into a one-dimensional space of proximity. This is the core of the Apple approach to CSAM, and it’s not novel either. PhotoDNA was developed by Microsoft over a decade ago, and has been used extensively to combat CSAM since then. I was first acquainted with it at Facebook, where it was used to filter out sketchy ad creative. Your ad for beauty products featuring a nude woman got blocked by the Ads Integrity team? Just add a one-pixel border in black and BOOM! any bitwise equality filter will fail and suddenly your ad creative (or CSAM) would be live again. But if you use a perceptual hash that understands that the hacked pixels are barely perceived, your ads are rejected (and Mr. Hogle ends up in some dark oubliette where he belongs).
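To see why the border trick beats a bitwise filter but not a perceptual one, here is a toy sketch using 'average hash', one of the simplest perceptual hashes. To be loud about the assumptions: this is not PhotoDNA and not Apple's algorithm, the 32x32 synthetic images are invented for the demo, and the border is painted over the outermost pixels (rather than added around them) so the image size stays put.

```python
import hashlib
from typing import List

Image = List[List[int]]  # rows of grayscale pixels, each 0..255


def shrink(image: Image, size: int = 8) -> List[float]:
    """Downscale to size x size by averaging equal blocks (dimensions must divide evenly)."""
    block_h, block_w = len(image) // size, len(image[0]) // size
    cells = []
    for cy in range(size):
        for cx in range(size):
            total = sum(
                image[y][x]
                for y in range(cy * block_h, (cy + 1) * block_h)
                for x in range(cx * block_w, (cx + 1) * block_w)
            )
            cells.append(total / (block_h * block_w))
    return cells


def average_hash(image: Image) -> int:
    """64-bit hash: one bit per cell, set when the cell is brighter than the mean."""
    cells = shrink(image)
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where the two hashes disagree."""
    return bin(a ^ b).count("1")


def with_black_border(image: Image) -> Image:
    """Paint the outermost ring of pixels black, as a spammer might."""
    h, w = len(image), len(image[0])
    return [
        [0 if x in (0, w - 1) or y in (0, h - 1) else image[y][x] for x in range(w)]
        for y in range(h)
    ]


def sha256_of(image: Image) -> str:
    """Exact, bit-for-bit fingerprint of the raw pixel data."""
    return hashlib.sha256(bytes(p for row in image for p in row)).hexdigest()


if __name__ == "__main__":
    original = [[255 if x >= 16 else 0 for x in range(32)] for y in range(32)]
    bordered = with_black_border(original)
    unrelated = [[255 if y >= 16 else 0 for x in range(32)] for y in range(32)]

    print("exact hashes equal:", sha256_of(original) == sha256_of(bordered))
    print("perceptual distance, bordered:", hamming(average_hash(original), average_hash(bordered)))
    print("perceptual distance, unrelated:", hamming(average_hash(original), average_hash(unrelated)))
```

The exact hash changes completely, while the perceptual hash of the bordered copy stays at (or very near) the original. Real systems use far more robust features, but the matching step is the same idea: small distance means 'same picture'.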

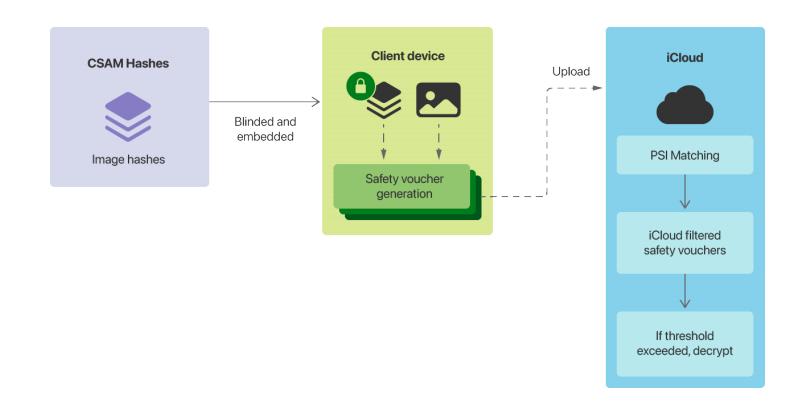

Which brings us to the Apple plan. Here’s how it works (again, skipping over some of the wonky cryptographic detail and the complex device/server interaction):

1. Apple hashes a database of known CSAM from organizations like NCMEC (no, Apple doesn’t keep a CSAM gallery around).

2. The (irreversible and encrypted) hashes are placed on your phone, and compared to hashed versions of photos you’re uploading to iCloud.

3. If you’ve got more than a threshold number of images that match, you trigger alarm bells and your images are decrypted for human inspection and possible flagging to law enforcement.

4. If you don’t match images beyond the threshold, Apple can see nothing and nothing happens.
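Stripped of all the cryptography, the matching-and-threshold logic above boils down to something like the sketch below. Everything here is hypothetical: the function names, the integer stand-ins for perceptual hashes, and the threshold value are mine. The real scheme wraps this in cryptography precisely so that nobody can run a plain comparison like this one on your photos.

```python
from typing import Iterable, Set


def count_matches(photo_hashes: Iterable[int], known_hashes: Set[int]) -> int:
    """Count uploaded photos whose hash appears in the known-bad database."""
    return sum(1 for h in photo_hashes if h in known_hashes)


def should_escalate(photo_hashes: Iterable[int], known_hashes: Set[int], threshold: int) -> bool:
    """True only when the match count exceeds the threshold (human review follows)."""
    return count_matches(photo_hashes, known_hashes) > threshold


if __name__ == "__main__":
    known = {0xDEADBEEF, 0xFEEDFACE, 0xBADC0DE}  # made-up database entries
    library = [0x12345678, 0xDEADBEEF, 0x0BADF00D]  # made-up photo hashes
    hypothetical_threshold = 2

    print("matches:", count_matches(library, known))
    print("escalate:", should_escalate(library, known, hypothetical_threshold))
```

Note that a single match does nothing here, which is the whole point of the threshold: one stray collision shouldn't be enough to open anyone's photo library.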

That’s it. So where can this go wrong?

In step (3) is where. A ‘collision’ between the perceptual hash of your photo and a known CSAM image is where the specter of enforcement begins. That hashing, like any classification algorithm, will have a ‘false positive’ rate, where images are considered as being fuzzily equivalent when they’re really not. This might be easier to trigger than one might think.

What’s every new parent have on their phone? Hundreds of photos of a naked newborn is what. Will some subset of those, as repellent as the thought might be to a parent, match to a database of CSAM given the nature of image hashing? Well, we’re about to find out, but I’d be surprised if they didn’t with a large enough input set of users and photos.

In Apple’s docs (linked above), they claim a ‘1 in 1 trillion’ chance per year of incorrectly flagging a given account. Color me skeptical. No false positive rate on anything is that low. And however low it might get, multiplied by Internet scale you’re bound to have a few cases. Which is why Facebook, which had a pretty efficient porn and CSAM detection machine, would still get it wrong and take down photos of nursing mothers. In the Facebook case, it caused nothing but a momentary media cycle and some motherly hysterics[2], but in CSAM policing, the downstream implications might be rather more severe.

Picture the FBI CSAM task force kicking in the door of some obsessive father with 10,000 baby pics on his phone. Or imagine some cretin ‘swatting’ someone by WhatsApping them photos of known CSAM hash collisions; those photos get default saved to the device, and if configured thusly, backed up to iCloud. Suddenly, the FBI is kicking down your door for something someone sent you. Or imagine privacy zealots intentionally finding such hash collisions and uploading them to iCloud en masse, in an attempt to overwhelm the detection system. That could render the debate almost moot.

Apple claims that the inevitably non-zero false-positive rate is mitigated by the thresholding scheme whereby only a certain number of matches triggers law enforcement. But how do they know what the net real-world false-positive rate is for their system? They have no way of accurately modeling the correlation of matches among billions of users and trillions of photos until they ship their system in production, whatever their assurances now. The only real safeguard is that they’ll have humans review the final matches: the system makes it possible for Apple to see your photos once the alarm is triggered. One hopes that keeps some overzealous parent out of jail.
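Here is the back-of-envelope version of that argument, as a sketch. Every number in it is invented for illustration (Apple hasn't published a per-image rate that I can plug in here), and the threshold math assumes each photo is an independent coin flip, which is exactly the assumption I'm doubting: a parent's 10,000 baby pics are anything but independent.

```python
import math


def expected_false_matches(per_image_rate: float, total_images: int) -> float:
    """Expected number of false matches across all scanned images."""
    return per_image_rate * total_images


def prob_more_than(threshold: int, photos: int, per_image_rate: float) -> float:
    """P(more than `threshold` false matches among `photos`), assuming independence.

    Exact binomial tail, summed in log space to avoid overflow.
    """
    if per_image_rate <= 0.0:
        return 0.0
    if per_image_rate >= 1.0:
        return 1.0 if photos > threshold else 0.0
    total = 0.0
    for k in range(threshold + 1, photos + 1):
        log_term = (
            math.lgamma(photos + 1)
            - math.lgamma(k + 1)
            - math.lgamma(photos - k + 1)
            + k * math.log(per_image_rate)
            + (photos - k) * math.log1p(-per_image_rate)
        )
        total += math.exp(log_term)
    return min(total, 1.0)


if __name__ == "__main__":
    rate = 1e-6  # hypothetical per-image false-match rate
    print("false matches per billion photos:", expected_false_matches(rate, 1_000_000_000))
    # One user with 10,000 photos, at two hypothetical thresholds:
    print("P(> 1 match): ", prob_more_than(1, 10_000, rate))
    print("P(> 30 matches):", prob_more_than(30, 10_000, rate))
```

Under independence, the threshold crushes the per-account probability to something absurdly small, which is presumably how you arrive at trillion-to-one figures. The catch is that the real world's correlations only show up in production.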

Waving the product-manager magic wand

A thought experiment I’d often perform when emcee’ing some product powwow among a mixed group of engineers and business types is imagining there was a magic wand that let you build anything you wanted. This focuses the conversation around what actually needed to get built, rather than getting bogged down (momentarily) in the engineering and business specifics that often waylaid discussion.

Let’s do that now: What legal and privacy regime should rule in a world of infinite photos on infinite devices where some small subset is being used for a heinous crime? To remind ourselves of the going standard, here’s the Fourth Amendment:

The right of the people to be secure in their persons, houses, papers, and effects, against unreasonable searches and seizures, shall not be violated, and no Warrants shall issue, but upon probable cause, supported by Oath or affirmation, and particularly describing the place to be searched, and the persons or things to be seized.

Seems sensible enough, when every piece of information was on some actual analog piece of paper in some physical desk or room. What’s 4A mean in an iPhone world? Should we expect a judge’s warrant every time Apple or law enforcement nose around your photos to see if you’ve got CSAM? Should we subject the Apple match-thresholding scheme to the conventional rigors of ‘probable cause’? By that standard, a lot of Christian Hogles will live unperturbed lives of child predation, facilitated by the frictionless sharing the internet makes possible.

Critics of the Apple approach, given recent examples of tech companies overreaching their oversight, warn of the inevitable ‘slippery slope’ that such technology presents. A way to check your photos against a database of content means Apple could conceivably start policing not just such inarguably vile stuff as CSAM, but whatever cheeky (but legal) 4Chan memes you’ve got on your phone, all in the name of ‘harm reduction’ or whatever regnant moral panic. It’s not a wild suggestion to think that this enforcement mechanism, like all such mechanisms, could fall into the wrong hands and usher in tyranny by way of nominally good intentions.

The police deliver a sharp knock on the door, or even a kick, everywhere from authoritarian Cuba to the democratic West. The difference is between the extrajudicial flex of raw power in one case, and an armed emissary, anointed by a disinterested judiciary, carrying out codified popular will in the form of law. Same method, different morality.

As with so many other problems that technology creates, what seems like a wonky technical issue actually boils down to an eminently human one: how to restrain and channel power toward morally good ends. And the greater the power in question (in this case, the all-seeing eyes of sophisticated algorithms), the more complex the restraints need to be. We’re not in the era of deskbound ‘personal papers’ and VHS tapes in the back of closets anymore, and our laws and methods of enforcement shouldn’t be either.

[1] Those who want to see a real hash function in action (and are on a Mac), open your ‘Terminal’ application, and type ‘md5 -s antonio’ to see what my name really hashes to.

[2] Random working-at-Facebook anecdote: I pull up one day to One Hacker Way, ready for another day in the tech trenches, and there’s a mob of nursing women in front of Facebook’s Building 10. What the hell was going on? There was a so-called ‘nurse in’ to protest Facebook’s obviously malicious (really just mistaken) take-down of nursing photos. Zuck hates babies and motherhood, don’t you know? False positives have consequences…
